What is responsible AI?

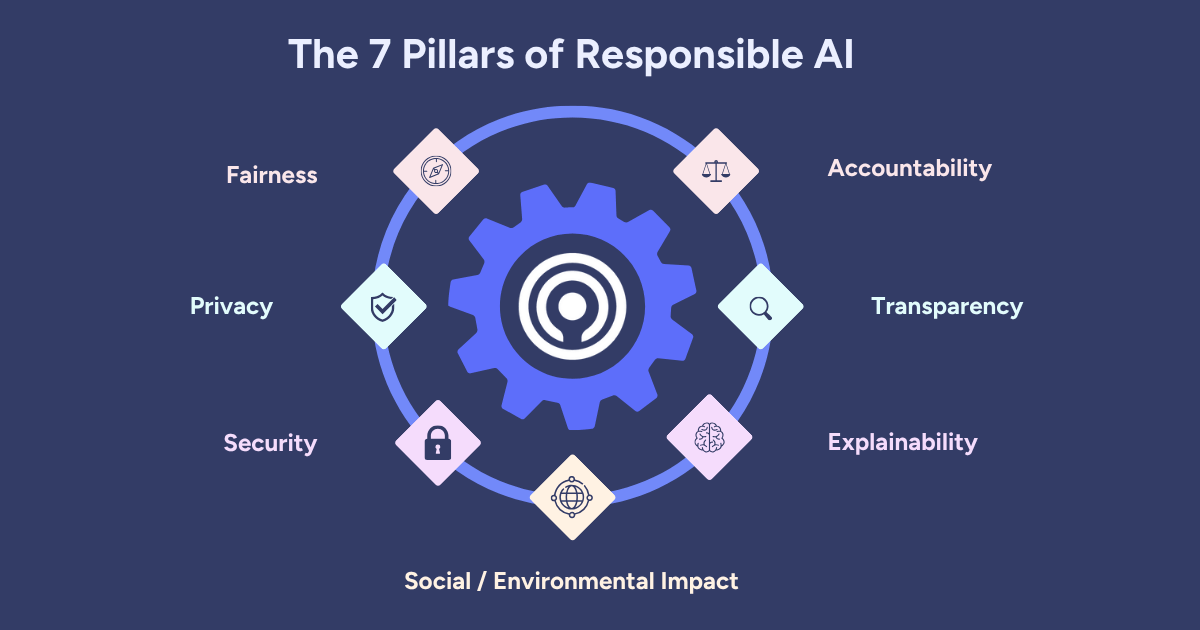

Responsible AI encompasses the ethical, legal, and societal considerations involved in the design, development, and deployment of artificial intelligence (AI). It emphasizes not only the prevention of harm but also the proactive pursuit of better business outcomes. The seven key pillars of responsible AI are transparency, accountability, explainability, security, privacy, fairness, and social/environmental impact.

Responsible AI represents a commitment to deploying artificial intelligence technologies in ways that align with ethical principles, human rights, and societal good. With AI transforming industries from healthcare and finance to manufacturing and logistics, responsible practices are no longer optional.

Responsible AI in action

A fast-scaling tech company rolls out an AI-powered content moderation tool. To avoid inadvertently silencing underrepresented voices, the development team audits the training data and integrates real-time bias detection.

A hospital system leverages predictive models to anticipate patient deterioration. These models are trained on diverse datasets and offer explainable outputs, enabling clinicians to understand, trust, and act on the insights.

Meanwhile, a global financial services provider utilizes AI for fraud detection, deploying models that are continuously monitored for false positives and fairness across demographic groups.

Each of these scenarios reflects a core principle: innovation must not outpace responsibility.
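
The fraud detection scenario above mentions monitoring false positives across demographic groups. As a minimal sketch of what such a check might look like, the following compares false positive rates per group. The function names and the 0/1 label encoding are assumptions made for illustration, not part of any specific product or framework.

```python
from collections import defaultdict


def false_positive_rate_by_group(y_true, y_pred, groups):
    """Return {group: FPR}, where FPR = false positives / actual negatives.

    Assumes labels are encoded as 0 (legitimate) and 1 (fraud).
    Groups with no actual negatives are omitted from the result.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        if truth == 0:
            negatives[group] += 1
            if prediction == 1:
                false_positives[group] += 1
    return {g: false_positives[g] / negatives[g] for g in negatives}


def max_fpr_gap(rates):
    """Largest difference in false positive rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())
```

A monitoring job could compute these rates on each batch of labeled transactions and raise an alert when the gap exceeds a threshold the organization has agreed on.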

Defining responsible AI: Principles and frameworks

Responsible AI is a mindset as much as a set of practices. It centers on the belief that AI should augment human capabilities while protecting societal values. Its key principles are described below.

Transparency and explainability

Models should offer insight into how decisions are made. This is particularly critical in regulated environments such as healthcare and finance, where black-box outputs can erode trust or lead to audit failures.
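
One simple, model-agnostic way to gain insight into a black-box model is permutation importance: shuffle one input feature at a time and measure how much accuracy drops. The sketch below is an illustration under stated assumptions (a hypothetical `predict` callable that takes one row, and rows given as lists); it is not a prescribed method for any particular regulation or tool.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Return the accuracy drop caused by shuffling each feature column.

    A larger drop suggests the model relies more heavily on that feature.
    `predict` is any callable that maps one row to a predicted label.
    """
    rng = random.Random(seed)

    def accuracy(data):
        correct = sum(predict(row) == label for row, label in zip(data, labels))
        return correct / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        # Shuffle only column j, leaving every other feature untouched.
        column = [row[j] for row in rows]
        rng.shuffle(column)
        shuffled = [
            list(row[:j]) + [value] + list(row[j + 1:])
            for row, value in zip(rows, column)
        ]
        importances.append(baseline - accuracy(shuffled))
    return importances
```

Features whose shuffling leaves accuracy unchanged contribute little to the decision, which gives reviewers a starting point for questioning the features that matter most.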

Fairness and bias reduction

AI systems must be trained on diverse, representative data and subjected to fairness audits. Techniques such as adversarial debiasing and reweighting are increasingly standard.
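
To make the reweighting idea concrete, here is a minimal sketch of one common form: each training sample gets a weight so that, after weighting, group membership and outcome label are statistically independent. The function name and input layout are assumptions for illustration, and other reweighting schemes exist.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one weight per sample: P(group) * P(label) / P(group, label).

    Over-represented (group, label) combinations get weights below 1,
    under-represented combinations get weights above 1.
    """
    n = len(groups)
    if n == 0 or n != len(labels):
        raise ValueError("groups and labels must be non-empty and equal in length")
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]
```

The resulting weights can be passed to any training routine that accepts per-sample weights. After weighting, each group has the same weighted rate of positive labels.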

Accountability

Clear governance structures must define who is responsible for model performance, decisions, and remediation.

Privacy and security

Responsible AI prioritizes data protection, supporting practices like differential privacy, data minimization, and encrypted model training.
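
As an illustration of differential privacy, the sketch below releases a count with Laplace noise, a standard mechanism for this purpose. The function name, the `predicate` parameter, and the choice of the Laplace mechanism are assumptions for the example. It uses Python's general-purpose random module, so it is a teaching sketch rather than a hardened implementation.

```python
import random


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that satisfy predicate.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon is used.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two independent exponential samples with mean
    # `scale` follows a Laplace distribution centered at 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise
```

Any single answer is perturbed, but the noise averages out over many queries, which is why real deployments also track a total privacy budget across queries.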

Frameworks such as the EU AI Act, NIST’s AI Risk Management Framework, and OECD AI Principles are guiding the adoption of AI across jurisdictions.

What are the key benefits of responsible AI?

Organizations are increasingly recognizing responsible AI as a differentiator, not a drag on innovation, for the following reasons.

Enhanced trust and user adoption

When users understand and trust AI outputs, adoption increases across functions, from frontline teams to executive leadership.

Regulatory readiness

Proactively aligning with emerging regulations minimizes compliance risks and future-proofs AI investments.

Improved model performance

Fair, transparent models are not only more ethical; they also often outperform biased or opaque systems by avoiding data distortions and overfitting.

Stronger brand equity

In an era of rising tech scrutiny, brands that champion responsible AI stand out to customers, investors, and talent.

What are some roadblocks to implementing responsible AI?

Despite growing awareness, operationalizing responsible AI remains a significant challenge.

Balancing speed with oversight

Agile development can sometimes sidestep ethical reviews. Embedding ethics across the AI lifecycle, from model conception to post-deployment monitoring, requires cultural and procedural shifts.

Tackling data bias and quality issues

Biased data leads to biased models. Organizations must:

- Conduct rigorous data audits

- Implement fairness-aware preprocessing

- Use synthetic data to augment underrepresented populations
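
A data audit can start with something as simple as checking how well each group is represented. The following sketch flags groups whose share of the dataset falls below a threshold. The function name and the default 10% threshold are assumptions chosen for illustration; an appropriate threshold depends on the population the model is meant to serve.

```python
from collections import Counter


def representation_audit(groups, min_share=0.1):
    """Return (shares, flagged) for a list of group labels.

    shares maps each group to its fraction of the dataset.
    flagged lists the groups whose share is below min_share.
    """
    n = len(groups)
    if n == 0:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    shares = {group: count / n for group, count in counts.items()}
    flagged = sorted(g for g, share in shares.items() if share < min_share)
    return shares, flagged
```

Flagged groups are candidates for additional data collection or for augmentation with synthetic data, as described in the list above.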

Talent and tooling gaps

Responsible AI demands multidisciplinary teams with expertise in ethics, data science, legal compliance, and product development. It also requires platforms that support inline data wrangling, explainable AI, and real-time analytics for full transparency.

What industries are using responsible AI methods?

As responsible AI gains traction across the enterprise, its impact is being felt most tangibly in industries where decisions carry significant ethical, financial, or human consequences.

Healthcare

Hospitals can enhance patient care through data-driven clinical decision-making. Responsible AI in practice:

- Transparency: Use AI to explore patient data and understand care patterns, fostering explainable outcomes.

- Accountability: Support decisions with visual analytics and track data provenance throughout, so that each decision can be audited.

- Ethical use of AI: Apply AI insights to optimize outcomes without compromising patient rights or safety.

Learn more about AI use in Healthcare from NUHS.

Finance

Financial services companies can accelerate customer segmentation and deliver personalized services. Responsible AI in practice:

- Transparency and explainability: Use visual analytics to explore customer data and ensure that segmentation logic is clear and actionable.

- Bias reduction: Interrogate data patterns visually to identify and correct potential biases before they affect pricing or service levels.

- Accountability: Make data models and insights accessible to business stakeholders, fostering oversight and governance over AI-driven decisions.

Learn more about AI use in financial services from AA Ireland.

Energy

Energy companies can streamline production monitoring and improve decision-making across offshore platforms. Responsible AI in practice:

- Transparency: Visualize and interact with real-time operational data, ensuring that AI-driven recommendations are fully explainable and grounded in contextual understanding.

- Accountability: Make operational decisions based on AI insights that are traceable, with audit-friendly workflows that support governance requirements.

- Sustainability: Optimize energy output and detect anomalies early to align your data strategy with environmental and safety goals.

Learn more about AI use in Energy from Liberty Energy.

Key takeaways

Responsible AI is both a moral imperative and a business necessity. It enables companies to unlock the power of AI while safeguarding human rights, ensuring fairness, and building long-term trust. From healthcare and finance to energy and manufacturing, responsible AI is no longer a future aspiration; it’s today’s competitive advantage.
